Noindex Meta Tag: The Complete Guide for SEO and Website Optimization

- Home

- Knowledge Sharing

- Noindex Meta Tag: The Complete Guide for SEO and Website Optimization

Reading Time: 11-13 minutes

Key Takeaways

- The noindex meta tag tells search engines not to index a page, even though crawlers can still access it.

- Use noindex for thank-you pages, internal search results, login/account areas, duplicate or low-value pages, and staging environments.

- Robots.txt blocks crawling, while noindex removes a page from search results — both serve different purposes.

- Apply canonical tags when consolidating duplicates, but use noindex when a page should be completely excluded.

- Best practice is noindex, follow for most cases — it excludes the page but allows link equity to flow.

- Noindex can be added via a meta robots tag in the <head> or an X-Robots-Tag in HTTP headers.

- Implementation differs across CMS (WordPress, Magento, Drupal, Joomla, Shopify, Wix, etc.), but the principle remains the same.

- Overusing noindex can harm visibility; apply it selectively and verify results in Google Search Console.

Table of contents

Search engine optimization (SEO) is not just about helping pages rank, it is also about controlling which pages should not appear in search results. The noindex meta tag gives website owners precise control over indexation, allowing them to exclude pages that add little or no value in search.

This directive is particularly important for websites with large content libraries, eCommerce stores, or platforms that generate many low-value or duplicate pages. By applying noindex thoughtfully, site owners can keep their index lean, improve crawl efficiency, and ensure that only meaningful content is surfaced by search engines.

Google officially recognizes the robots meta tag and provides clear guidance on how it works in its documentation: Robots meta tag and X-Robots-Tag specifications .

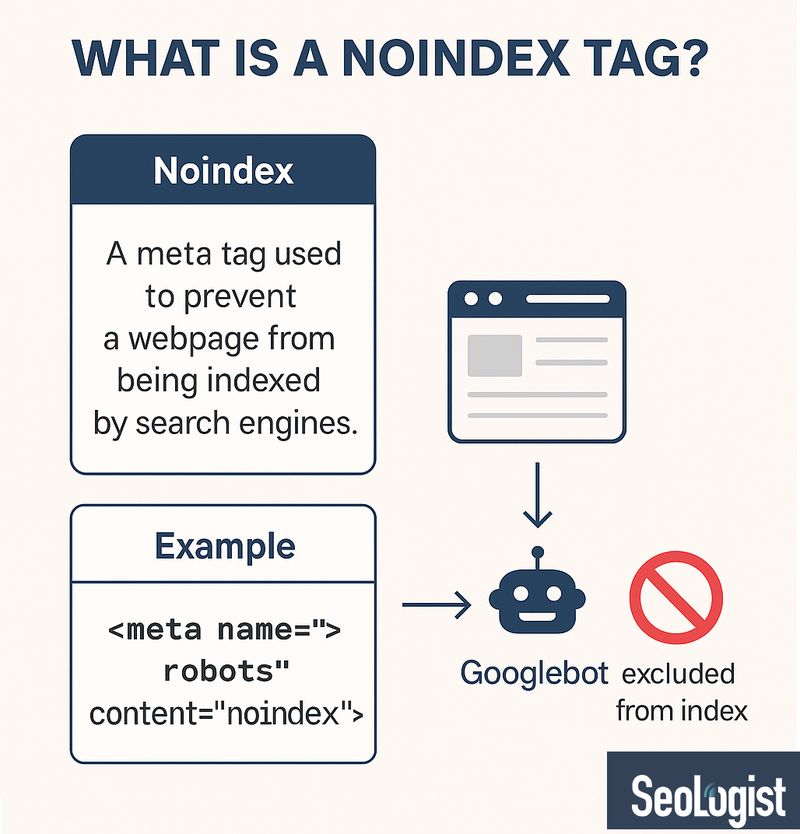

What Is a Noindex Tag?

The noindex meta tag (sometimes called meta robots noindex ) is a directive that tells search engines not to include a specific page in their search index. Unlike robots.txt, which prevents crawling, a noindex tag allows a crawler to access the page but ensures it will not appear in search results.

Here is the basic syntax:

<meta name="robots" content="noindex">

This tag can be combined with other directives such as nofollow:

<meta name="robots" content="noindex, nofollow">

Key Terms to Understand

- noindex – prevents a page from being indexed.

- nofollow – prevents search engines from following links on the page.

- meta robots tag – the HTML element that holds the noindex or nofollow directive.

- x-robots-tag – an alternative method for sending directives via HTTP headers.

“The noindex directive is one of the most powerful tools in technical SEO. It allows site owners to control exactly what appears in search results without cutting off crawlability.” — Mike Zhmudikov, SEO Director, Seologist

Why and When to Use Noindex Directives

Not every page on your website should rank in Google. Some are essential for user experience but have no business in the search index. If indexed, these pages can create noise, dilute authority, or cause duplicate content issues.

Common Use Cases

- Thank-you pages – post-conversion pages with no SEO value.

- Internal search results – thin and repetitive content.

- Login or account pages – private content not relevant to search.

- Duplicate versions of content – print-friendly formats or URLs with tracking parameters.

- Staging and test environments – non-production versions of a site.

Benefits of Using Noindex

- Focuses crawling on valuable content.

- Prevents unnecessary indexation (“index bloat”).

- Reduces risks of keyword cannibalization.

Pages That Typically Require Noindex

The table below highlights the most common types of pages where applying a noindex directive is either recommended or sometimes necessary, along with the reasoning behind each case.

| Page Type | Should You Use Noindex? | Reason |

|---|---|---|

| Thank-you pages | ✅ Yes | Conversion-only, no SEO value |

| Internal search results | ✅ Yes | Thin/duplicate content |

| Login/account pages | ✅ Yes | Private, user-specific |

| Duplicate parameter URLs | ✅ Yes | Avoids index bloat |

| Category pages in eCommerce | ⚠ Sometimes | Use case dependent |

| Blog posts & landing pages | ❌ No | Valuable for SEO |

In short, the noindex tag is not meant to hide important content but to filter out pages that add little or no value to search engines. By applying it carefully, you help Google and other crawlers focus on your most strategic pages while keeping irrelevant or redundant ones out of the index.

Noindex vs Robots.txt

At first glance, Noindex and Robots.txt may appear similar, but they serve different purposes in SEO and index management.

- robots.txt disallow prevents crawlers from accessing a page or directory altogether.

- noindex allows crawlers to access the page but ensures it will not appear in search results.

| Feature | Noindex Tag | Robots.txt Disallow |

|---|---|---|

| Blocks crawling? | ❌ No | ✅ Yes |

| Blocks indexing? | ✅ Yes | ❌ Not guaranteed |

| Works per page? | ✅ Yes | ⚠ Limited (per path) |

| Supports other directives (nofollow, etc.) | ✅ Yes | ❌ No |

| Recommended for thin/duplicate content | ✅ Yes | ❌ No |

According to

Google’s documentation

, robots.txt only controls crawling, not indexing. If you want to make sure a page does not appear in search results, a noindex directive should be used.

Robots.txt is best applied for restricting crawler access to unnecessary or sensitive resources, while noindex should be used when a page can be crawled but must stay out of the search index.

Noindex vs Canonical

Another frequent source of confusion is the difference between noindex and canonical tags . Both are designed to deal with duplicate or overlapping content, but their purpose is not identical.

- Canonical signals to search engines which version of a page should be treated as the main one.

- Noindex excludes a page entirely from the index.

| Feature | Canonical Tag | Noindex Tag |

|---|---|---|

| Keeps a page in the index? | ✅ Yes (main version) | ❌ No |

| Passes link equity? | ✅ Yes | ❌ No |

| Best for duplicates? | ✅ Yes | ✅ Yes, when the page should be excluded entirely |

| Can be combined with others? | ✅ Yes (with hreflang, etc.) | ✅ Yes (with nofollow) |

Example canonical tag:

<link rel="canonical" href="https://www.example.com/main-page/">

So, use canonical when you want to consolidate authority between similar pages, and apply noindex when the page should be completely excluded from search visibility.

How to Implement a Noindex Tag

There are several ways to add a noindex directive , and the choice depends on the type of content and platform. The most common is by using the meta robots tag directly in the HTML code of a page.

1. Adding a Meta Robots Tag in HTML

To exclude a page from search results, you need to insert the following line of code into the <head> section of your HTML:

<head>

<meta name="robots" content="noindex">

</head>

Important: The tag must always be placed inside the <head> of the page, not in the <body>. If it is placed incorrectly, search engines may ignore it.

2. Combining Noindex with Nofollow

Sometimes you may want to exclude a page from indexing and prevent search engines from following links on it. In this case, you can use:

<meta name="robots" content="noindex, nofollow">

- noindex means the page will not appear in search results.

- nofollow means that all links on this page will not be followed by crawlers, and no link equity will be passed.

This combination is typically used for low-value pages such as login portals, checkout steps in eCommerce, or temporary test pages.

If you only want to exclude the page but still allow crawlers to follow the links on it, use:

<meta name="robots" content="noindex, follow">

This is useful for category or filter pages in online stores: the page itself should not be indexed, but the product links should still be discoverable.

3. Using the X-Robots-Tag via HTTP Headers

For non-HTML content (like PDFs, images, or other files), you cannot insert a meta tag into the file itself. Instead, you use the X-Robots-Tag directive in the server’s HTTP response header.

Example:

X-Robots-Tag: noindex

This tells search engines that the file should not be indexed.

Key Considerations When Implementing Noindex

- Always double-check that the page is not blocked in robots.txt . Otherwise, Googlebot won’t be able to see the noindex directive.

- Use “noindex, follow” when you want to keep link equity flowing to other parts of your site.

- Reserve “noindex, nofollow” for truly unimportant or sensitive pages.

- After implementation, use Google Search Console → URL Inspection Tool to confirm that Googlebot can see the noindex tag.

So, the best practice is to place a meta robots noindex directive in the <head> of the page when dealing with standard HTML content, and to use X-Robots-Tag headers for files and non-HTML resources. This way you ensure that only the right content makes it into Google’s index.

Noindex in Popular CMS and Website Platforms

Adding a noindex tag is straightforward in most content management systems (CMS). The process differs depending on the platform, but the goal is the same — insert a meta robots directive into the page’s <head> section or configure it via built-in SEO tools or plugins.

WordPress

-

SEO Plugins (Yoast, RankMath, All in One SEO):

Most WordPress websites rely on SEO plugins. Within the page or post editor, you can find an “Advanced” tab where you simply toggle “ Allow search engines to show this Page in search results? ” → No . This automatically inserts: <meta name="robots" content="noindex, follow"> -

Manual Method:

If you prefer manual coding, you can edit the theme’s header.php file and insert the <meta> tag into the <head>.

Best use: thank-you pages, test posts, or private content.

Magento

- Built-in Functionality: Magento provides options in its admin panel to set meta robots for categories, products, and CMS pages. For instance, you can choose “NOINDEX, FOLLOW” for filtered navigation or duplicate categories.

- Extensions: SEO extensions allow bulk noindex management for layered navigation pages, out-of-stock products, or search results.

Best use: avoid index bloat from layered navigation and duplicate categories.

Drupal

- With the Metatag module , you can configure robots directives for individual content types or pages. Once enabled, you select noindex or noindex, follow in the module’s UI, and it generates the tag automatically.

Joomla

- SEO extensions like sh404SEF or EFSEO provide direct options to assign noindex on specific articles or menus.

OpenCart

- Requires either template editing (inserting <meta> tags manually in the header template) or SEO modules from the marketplace that add noindex controls.

Tilda, Wix, Shopify (Website Builders)

- Wix : Pages have an SEO tab where you can disable indexing, automatically adding the noindex directive.

- Shopify : Some themes allow editing the theme.liquid file to place noindex tags, while SEO apps simplify this process.

- Tilda: The page settings panel includes an “Indexing” option to disable indexing with a single click.

Regardless of whether you use WordPress, Magento, or a website builder like Shopify or Wix, the principle is the same: you either configure noindex through an SEO plugin/module or insert the <meta> tag into the <head>. The most important step is to confirm with Google Search Console that the directive is being read correctly.

SEO Best Practices for Noindex Usage

While the noindex tag is a powerful tool, it should be used with precision. Overusing it can hide valuable pages from search engines, while underusing it can lead to duplicate content and index bloat. The following best practices outline how to apply noindex effectively.

For eCommerce Sites

- Faceted navigation and filters: Filtering by colour, size, or price often generates thousands of duplicate or near-duplicate pages. Applying noindex, follow prevents these from cluttering the index while still allowing crawlers to follow product links.

- Out-of-stock products: Instead of letting them remain indexed, use noindex if the product is permanently unavailable or has been discontinued. For products that will be restocked, a canonical to the main version may be more appropriate.

- Internal search results: In most cases, these pages should be noindexed, since they usually provide little SEO value and may overload the index.

For Large Content Websites

- Archives and tag pages: Blogs and news portals often create thousands of date-based or tag archive pages. Unless these pages provide unique value, they should be noindexed to prevent unnecessary duplication.

- Thin author pages: Profiles that contain only a name, image, and brief bio may not warrant indexation. Applying noindex keeps the focus on content-rich pages.

- Low-quality duplicates: Printable versions, parameter-based URLs, or session-ID pages should not compete with primary content.

General Rules

- Prefer noindex, follow over noindex, nofollow unless you explicitly want crawlers to ignore all links on the page.

- Avoid combining robots.txt disallow with noindex for the same page — crawlers must access the page to see the directive.

- Regularly audit your website using Google Search Console → Page indexing report to ensure that valuable pages remain indexed and non-essential ones are correctly excluded.

In summary, the best approach is to apply noindex selectively — only to pages that add no search value. This strategy helps maintain a clean and efficient index, reduces duplicate content, and ensures your crawl budget is focused on the pages that truly matter.

Common Mistakes and Troubleshooting

Even when applied correctly, the noindex directive can sometimes lead to confusion. Below are the most frequent mistakes and how to resolve them.

1. Page Still Appears in Search Results After Adding Noindex

- Cause: It can take time for Google to recrawl and process the directive.

- Solution: Use the URL Inspection Tool in Google Search Console to request reindexing. Be patient — changes may take days or weeks.

2. Using Robots.txt and Noindex Together

- Cause: If a page is blocked in robots.txt, crawlers cannot access it to see the noindex directive.

- Solution: Do not block the page in robots.txt if you also want to use noindex. Let it be crawled, then excluded via the meta tag.

3. Overusing Noindex

- Cause: Applying noindex too broadly can hide important content, reducing organic visibility.

- Solution: Audit regularly to ensure only low-value or duplicate pages are excluded.

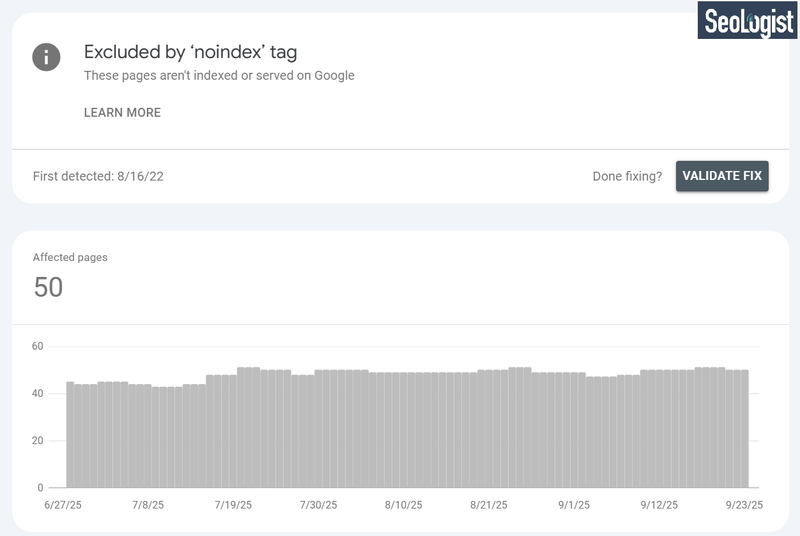

4. Search Console “Excluded by noindex tag” Status

- Cause: This status simply confirms that Google detected the directive and excluded the page.

- Solution: Review these reports to verify that only intended pages are excluded.

Overall, troubleshooting noindex usually comes down to understanding crawl behaviour and making sure directives are visible to Googlebot.

Final Thoughts on Using Noindex for SEO

The noindex directive is one of the most precise tools in technical SEO. When used correctly, it helps maintain a clean, efficient index by keeping low-value or duplicate pages out of search results. This ensures that your crawl budget is spent on the content that matters most, while unnecessary pages stay hidden.

The key is balance: apply noindex to thank-you pages, faceted navigation, and thin duplicates, but avoid overusing it on potentially valuable content. Regular audits with Google Search Console can help confirm that your implementation is working as intended.

Need expert help with technical SEO and index management?

Contact a Seologist to get a tailored SEO strategy for your website.

FAQs About Noindex Meta Tag

What is a noindex tag?

A noindex tag is a directive placed in the HTML or HTTP header that tells search engines not to include the page in search results.

How do I noindex a page in WordPress?

Use SEO plugins such as Yoast or RankMath. In the page settings, set the option to “No” for “Allow search engines to show this Page in search results.” This automatically generates a noindex meta tag.

Can I use noindex and nofollow together?

Yes. A common example is:

<meta name="robots" content="noindex, nofollow">

This excludes the page from search results and also prevents crawlers from following its links.

What is the difference between disallow and noindex?

- Disallow (robots.txt): Blocks crawling but does not guarantee exclusion from the index.

- Noindex: Ensures exclusion from search results while still allowing crawling.

Does noindex affect rankings of other pages?

No. It only applies to the page where it is set. However, if you use “noindex, nofollow,” internal links on that page will not pass equity.

How can I check if a page is excluded by noindex?

Use the Page indexing report in Google Search Console. Pages excluded by noindex will appear under “Why pages aren’t indexed → Excluded by ‘noindex’ tag.”