17 Common SEO Technical Issues and Ways to Solve Them

Everything about your online presence affects how the major search engines choose to rank your pages. That applies to the home page of your website, your blog posts, your landing pages, and even the social media posts that you set for the search engines to index. The goal is to use the best practices and ensure all the information you have online garners favour from Google and other prominent engines. Without it, people are less likely to find out what a gem of a business you operate.

Understanding the basics of search engine optimization is not enough. You also need to understand what sort of technical SEO issues can arise and what you can do about them. In some cases, the fixes are simple and relatively quick. Others are more involved and may require help from a designer or a true SEO expert.

While more issues are likely to develop as the beast known as the Internet continues to evolve, here are 17 of the most common SEO problems. Be aware of them and know what to do if you stumble across one or more of these issues.

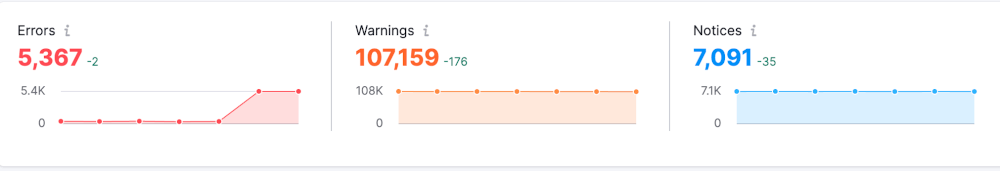

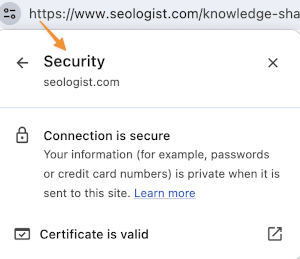

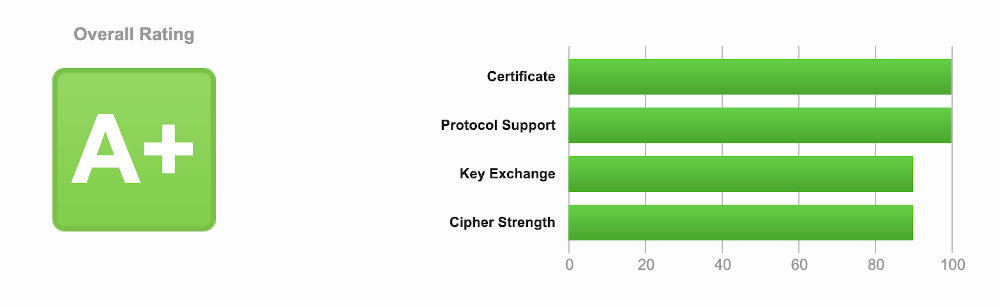

SEO Issue #1: How Secure Are Your Pages?

Security is always a priority. Some reasons are obvious: you don't want hackers collecting proprietary data any time they like. It's also a good idea in terms of being able to tell customers that your site is secure. What you may not realize is that a lack of security also drives down your SEO rankings.

How do people know if your site is secure? The URL tells them right off the bat. Simply put, if the URL begins with "http://" rather than "https://" they know there's a problem.

The search engines know too. As the crawlers evaluate pages, they do look at the URL. Similar pages that are https secure will gain more attention than your sad http page.

How do you fix things? Use a Certificate Authority to obtain what's known as an SSL certificate (strictly speaking, a TLS certificate these days). It costs very little, and some authorities, such as Let's Encrypt, issue certificates for free. Installing the certificate is usually a quick process. Once it's done, consumers and search engines alike will know your site is secure.
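Once the certificate is in place, it helps to hunt down any internal links that still point to the old http addresses. Here is a minimal Python sketch of that check. The `links` list and the example.com URLs are made up for illustration; in practice they would come from a crawl of your own site.

```python
from urllib.parse import urlsplit, urlunsplit


def is_secure(url: str) -> bool:
    """Return True when the URL uses the https scheme."""
    return urlsplit(url).scheme.lower() == "https"


def to_https(url: str) -> str:
    """Rewrite an http URL to https, leaving any other URL unchanged."""
    parts = urlsplit(url)
    if parts.scheme.lower() != "http":
        return url
    return urlunsplit(("https",) + tuple(parts[1:]))


# Hypothetical list of internal links pulled from a site crawl.
links = ["http://example.com/about", "https://example.com/contact"]
insecure = [link for link in links if not is_secure(link)]
```

Rewriting the links only makes sense after the https version of each page actually works, so treat `to_https` as a cleanup step rather than a fix on its own.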

SEO Issue #2: Improper Page Indexing Or No Indexing At All

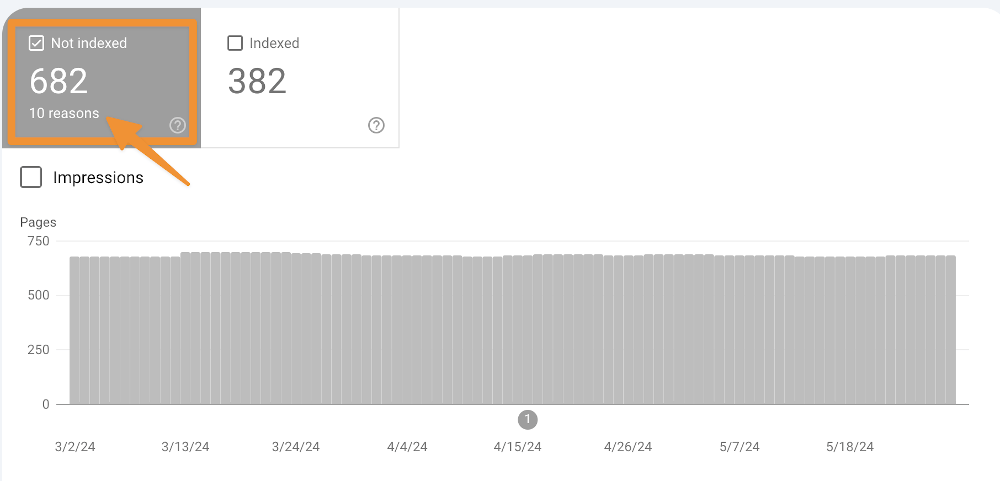

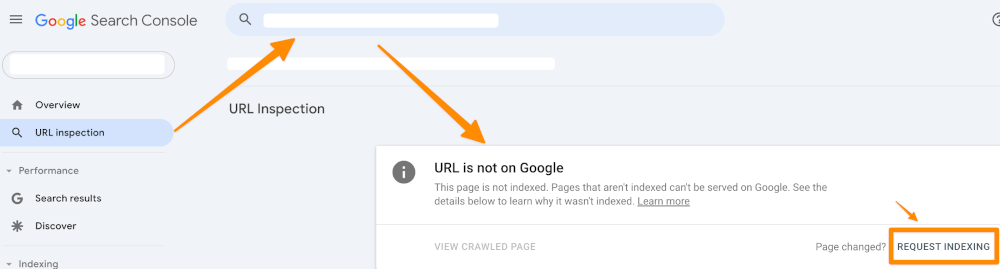

Indexing issues are another example of common SEO problems. Simply put, a page that's not indexed will not show up in any search engine results. It could be the most helpful and informative page online, but no one will be able to find it unless you give them the URL.

Perhaps your pages are indexed, but they are not indexed properly. They may still show up in searches, but not necessarily the ones where you need them to be viewable. That's only marginally better than not showing up at all.

Fortunately, it's possible to fix both issues. Begin by submitting the URL for each of your pages to Google and the other major search engines. Make sure your page descriptions are spot on and include keywords that are directly relevant to the page content. You'll also want to make sure that you haven't accidentally added what's known as a "NOINDEX" meta tag.

There may be the need to do a little cleanup. That's especially true if you have older pages with similar URLs that were indexed a long time ago. Most search engines have tools that administrators can use to de-index old pages that no longer exist.

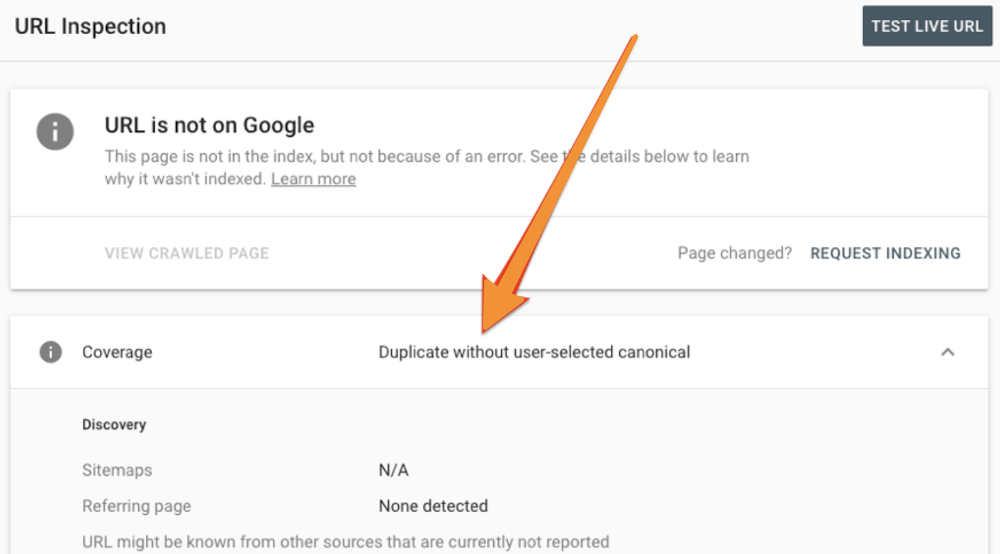

SEO Issue #3: Duplicate Content: A Recipe for the Old SEO Smackdown

There was a time when duplicate content on a website was not exactly a hindrance. That was also a time when conventional wisdom declared that more repetitions of the same keyword were a good thing. That sort of activity doesn't fly these days.

With the current algorithms, content that's the same as on other pages can be confusing for the search engine crawlers. There's already something similar indexed. Even if the page does end up being indexed, it's likely to be much lower in the rankings.

Another point that escapes some people is that duplicate content, assuming it does get indexed, tends to dilute the rankings for all pages involved. The result is that none of them perform as well as they should.

To fix the duplicate content error on the website:

- Identify pages with duplicate content.

- Make unique changes to each of these pages to differentiate them.

- Set canonical tags on pages to indicate the preferred version to search engines.

- Use redirects (e.g., 301 redirects) to consolidate duplicate pages into one.

- When creating new content, focus on uniqueness and avoid duplicating existing material.
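The first step in that list, identifying the duplicates, can be sketched in a few lines of Python. This is only a rough illustration: it assumes you already have the body text of each page in a dictionary (the `pages` name and its contents are hypothetical), and it only catches pages that are identical once case and spacing are ignored. Near-duplicates need a fuzzier comparison.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(text: str) -> str:
    """Hash the text after lowercasing it and collapsing whitespace."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Group URLs whose body text produces the same fingerprint."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]
```

Each group returned is a set of pages that should either be rewritten, merged with a 301 redirect, or pointed at one preferred version with a canonical tag.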

SEO Issue #4: Outdated Content

Another problem that sometimes goes hand in hand with duplicate content is outdated content. You know what that refers to: there are some pages that contain time-sensitive data that's long been replaced by newer studies, statistics, and other data. Even so, you've never got around to updating the information.

How does this create issues with your SEO efforts? The major search engines are always crawling for the most accurate and the most current data. It's all part of meeting the needs of consumers who are looking for information. If the data on your pages is so obviously old news that even the crawlers pick up on the fact, your rankings are likely to drop.

The most practical way to deal with outdated content is to update it. Replace those old charts from a decade ago with new ones that provide information related to this year or at least the most recently completed year. Quotes from years past that identify "new" products or ideas also need updating. If the information is no longer relevant to today, it has to go. No exceptions.

SEO Issue #5: Unpopular Keywords (Including Long-Tail Keywords)

While you're doing something about the duplicate or outdated content, take a fresh look at the keywords and keyword phrases incorporated into the text. Are they still popular today or has the sun set on their usage in searches? If it's the latter, you need to invest some time in finding current words and phrases to replace the keywords, including long-tail keywords, that are no longer popular.

This is actually a lot easier than you may think. Most search engines provide tools that make it easier to find popular keywords and phrases that people are using today. Google easily has some of the best keyword generation tools available. Best of all, those tools are available for use at no cost.

While the way keywords attract attention has changed, there's no doubt that they still make a difference when the crawlers evaluate your pages. Opting for using relevant keywords that are commonly used today will help your pages to rank a little higher. Even if the keywords you have in place are still somewhat popular, finding ways to logically insert a couple of more popular ones is likely to help your rankings a little.

SEO Issue #6: Poor Display on Mobile Devices

Those seeking to provide a good page experience should take a holistic approach. Enhancing display on mobile devices is a vast field where new ideas and techniques are constantly emerging. Some of the latest trends in this area include:

- Adaptive Design: Creating websites that can adapt to different screen sizes of mobile devices, providing an optimal user experience.

- Page Loading Speed: Utilizing technologies such as AMP (Accelerated Mobile Pages) and image optimization to reduce page load times on mobile devices.

- Mobile-First Fonts: Choosing legible fonts that display well on mobile screens, considering their limited space.

- Mobile Interactive Elements: Designing interactive elements that are easily accessible and user-friendly on mobile devices, such as mobile menus and forms.

- Voice Search and Control: Integrating voice search and control to enhance accessibility and usability on mobile devices.

- Scrolling Optimization: Developing content and interfaces optimized for vertical scrolling on mobile devices, considering user preferences and behaviors.

- Mobile Applications Usage: Developing mobile applications that offer an enhanced user experience and additional features beyond what a web browser allows.

These are just a few examples of recent ideas in improving mobile displays, which are constantly evolving and expanding.

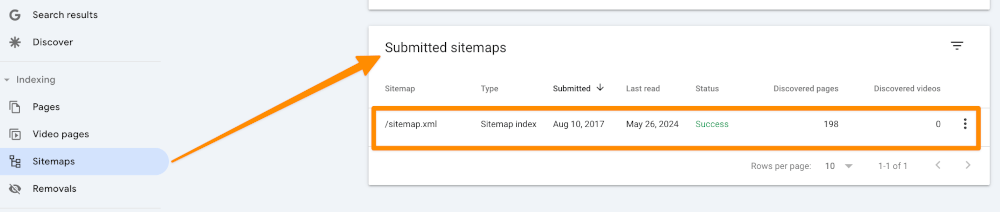

SEO Issue #7: Where are the XML Sitemaps?

A properly configured eXtensible Markup Language sitemap is essential if you want all of your pages to be found and indexed. An XML sitemap lists the pages you want crawled, which helps the crawlers discover them and get a better idea of how the site is organized. When the sitemap is set up incorrectly, it will hamper rather than help the indexing process.

How do you know if your XML sitemaps are working as they should? There's a quick way to check. Type your domain name into your browser's address bar, adding "/sitemap.xml" after it. If there's no sitemap at that location, you'll typically get a screen with an error. If you do have one, it will be easy to see the setup and have a professional determine if everything is in order. Google Search Console also reports whether a submitted sitemap could be read.
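If it turns out you have no sitemap at all, a basic one is not hard to produce. The Python sketch below builds the smallest useful version: a `urlset` element in the standard sitemap namespace with one `url` and `loc` entry per page. The `build_sitemap` helper is an illustration, not part of any particular CMS or SEO tool.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Return a minimal XML sitemap listing the given page URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")
```

Save the result as sitemap.xml at the root of the site. Optional fields such as `lastmod` can be added to each entry in the same way as `loc`.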

SEO Issue #8: Robots.txt Files

Web developers understand the importance of a properly structured robots.txt file. They also understand how not having one wreaks havoc from an SEO standpoint.

- Avoid relying on default robots.txt files provided by content management systems (CMSs), as they might overly restrict access to files or resources that don't need to be blocked. Instead, customize your robots.txt file based on your website's specific needs, preferably with input from an experienced SEO professional who understands your site's structure.

- Be cautious when blocking canonicalized URLs in your robots.txt file. Canonical tags are commonly used to manage duplicate content issues, but if these URLs are blocked, search engines won't be able to crawl the page to recognize the canonical tag, potentially impacting indexing and search visibility.

- Don't use robots.txt to noindex pages. While it was a common practice in the past, Google no longer follows noindex directives found in robots.txt files as of September 1, 2019. Instead, use page-level meta-robots noindex tags to prevent specific pages from being indexed.

- Similarly, avoid using robots.txt to block URLs that carry a meta-robots NOINDEX directive. If both the meta-robots noindex tag and a robots.txt block are applied to the same URLs, search engines may still index those pages because they won't crawl them to see the directive.

- Pay attention to case sensitivity in your robots.txt file. Paths in the file are case-sensitive, so using the wrong case can result in unintended consequences. For instance, "Disallow: /Folder" won't block a folder named "/folder". Be precise to ensure the correct directives are applied.

Properly managing your robots.txt file is crucial for effective search engine optimization (SEO) and ensuring the visibility of your website's content. By avoiding common mistakes, you can help search engines crawl and index your site appropriately.
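The case-sensitivity point is easy to verify for yourself. Python's standard library includes a robots.txt parser, and the short sketch below feeds it the "Disallow: /Folder" rule from the example above. The example.com URLs are placeholders.

```python
from urllib.robotparser import RobotFileParser

# The rule from the example above: note the capital F.
ROBOTS_TXT = """\
User-agent: *
Disallow: /Folder
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# The path that matches the rule's case is blocked...
blocked = not parser.can_fetch("*", "https://example.com/Folder/page.html")

# ...but the lowercase folder is still open to crawlers.
lowercase_blocked = not parser.can_fetch("*", "https://example.com/folder/page.html")
```

Here `blocked` comes out true and `lowercase_blocked` comes out false, which is exactly the trap described in the last bullet. Keep in mind that this shows how Python's parser reads the file; individual search engines may differ in the finer details.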

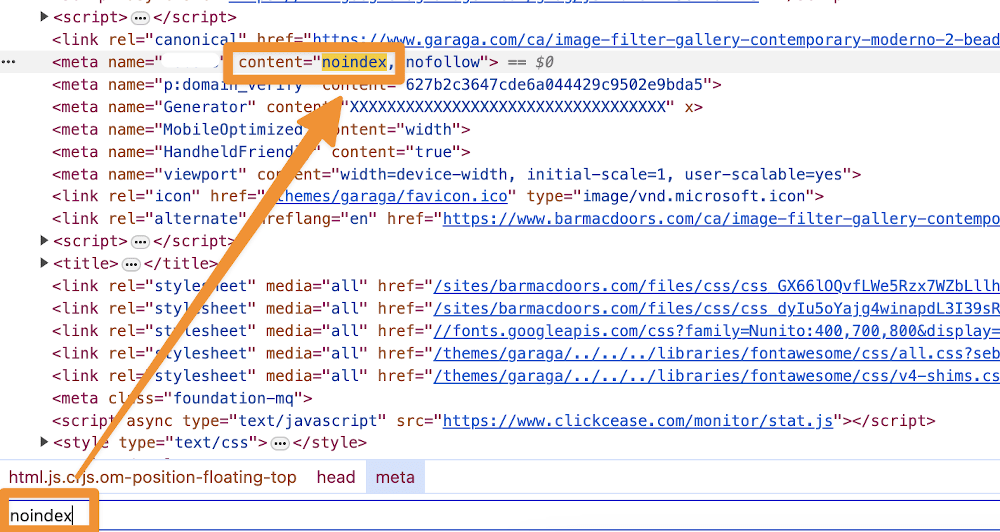

SEO Issue #9: An Improperly Functioning NOINDEX Tag

The purpose of a NOINDEX tag is to tell search engines to leave a page out of their index. It's a way to keep pages that have no business appearing in search results, such as thank-you pages or internal search results, from showing up there. This is helpful with any website, but is especially important when it comes to blogs.

So what happens if the NOINDEX tag is not set up properly? The outcome can be that all of your pages with a certain configuration are no longer showing up in the search engine's index. For all practical intents and purposes, those pages don't exist, at least not within that particular search engine.

During the development stage, all or most of your pages may carry a NOINDEX tag. However, those tags must be removed once the pages are complete and ready for the world to see. The only way to ensure that's done is to go back and review each page.

Fortunately, most browsers make this easy. Right-click on the page you want to check. In the menu that appears, click on the option to view the page source (the exact wording varies by browser). Using the find command, search for any lines that say "NOINDEX" or "NOFOLLOW".

Assuming that you do find one of these phrases, check with the developer and find out if the tag was left for some reason. If not, the developer can alter the line so that the crawlers will pick up and index the page. It may also be possible to simply remove the tag.
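Checking pages one at a time gets tedious on a larger site, so the same search can be scripted. The Python sketch below reads a page's HTML and collects whatever directives appear in its robots meta tag. The `robots_directives` helper is a made-up name for illustration, and the sketch only looks at meta tags; it does not cover the X-Robots-Tag HTTP header, which can carry the same directives.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> style tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in {"robots", "googlebot"}:
            for item in attributes.get("content", "").split(","):
                if item.strip():
                    self.directives.add(item.strip().lower())


def robots_directives(html: str) -> set[str]:
    """Return the lowercase robots directives found in the page source."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives
```

A page is flagged for review when `"noindex"` or `"nofollow"` shows up in the returned set.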

SEO Issue #10: Load Times Are Too Long

Why does the load time matter? Internet users don't have a lot of patience with slow loading. If they perceive that it's taking too long, they'll assume something is wrong with the site, back away, and pull up the next page in the search engine results. Think of that sort of thing as a lost opportunity.

We have gathered several key points for you that will help reduce loading times.

- Web Vitals: Google's Core Web Vitals are metrics that measure user experience on web pages: Largest Contentful Paint (LCP), Cumulative Layout Shift (CLS), and Interaction to Next Paint (INP), which replaced First Input Delay (FID) in March 2024. Google uses these metrics as ranking signals in its search results.

- AMP (Accelerated Mobile Pages): Google continues to develop and support AMP, a technology aimed at speeding up the loading of mobile pages. It offers tools and resources for creating AMP pages that load faster and provide a better user experience.

- HTTP/2 and HTTP/3: Transitioning to the new HTTP/2 and HTTP/3 protocols can improve the loading speed of web pages by facilitating more efficient data transfer between the server and client.

- Server-Side Rendering (SSR): Using SSR instead of client-side rendering can reduce the load time of web pages as pages can be generated on the server and sent to the client already prepared for display.

- Image and Resource Optimization: Development continues on methods and tools for optimizing images, CSS, JavaScript, and other resources on web pages to reduce their size and speed up loading times.

- Caching and Content Delivery Networks (CDNs): Utilizing caching and CDNs can help accelerate the loading of web pages by placing their content closer to end-users and reducing the load on servers.
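To make the Web Vitals point more concrete, here is a small Python sketch that rates a measurement against the "good" and "poor" thresholds Google publishes for each metric. It uses Interaction to Next Paint (INP), the responsiveness metric that took over from FID in March 2024. The `rate` function is just an illustration; the measurements themselves would come from a tool such as PageSpeed Insights.

```python
# Published thresholds: (upper bound for "good", upper bound for "needs improvement").
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout shift score
}


def rate(metric: str, value: float) -> str:
    """Classify a measurement as good, needs improvement, or poor."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"
```

For example, `rate("LCP", 3.2)` returns "needs improvement", which is a hint that the largest element on the page (often a hero image) is arriving too slowly.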

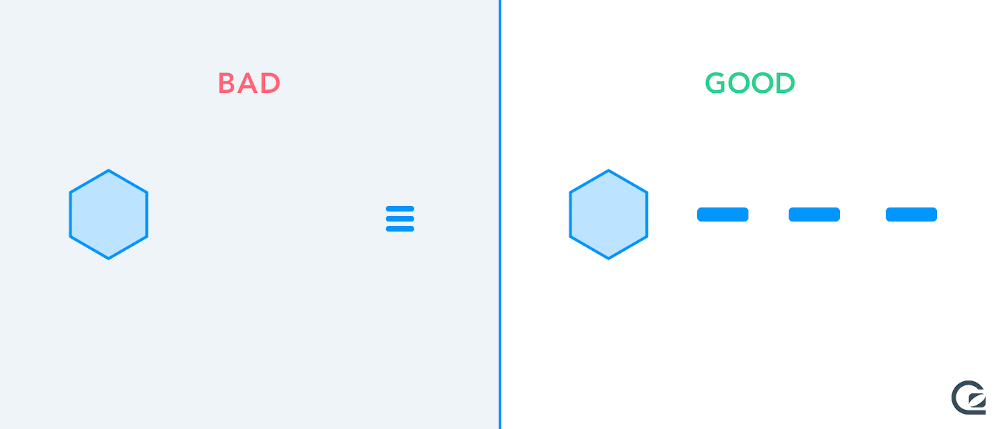

SEO Issue #11: Confusing Navigation

Another technical issue that will derail your SEO efforts is poor site navigation. While the first thought is that poor navigation affects your visitors only, it also has a lot to do with earning and maintaining better rankings.

Navigating from one page to another has to be simple. Ideally, all it requires is a single click. Visitors should always be able to click on something to get back to a home page. If there's no easy way to get from one page to the next one, the chances of new visitors returning are somewhere between slim and none.

As it relates to rankings, crawlers are on the lookout for this type of issue. When it's more difficult to move between pages, your site is likely to be considered one with inferior authority. While the pages are still indexed, they won't place very high in rankings. That's true even if you use keywords that are directly related to the page content and purpose.

The fix for this type of problem could be nothing more than restructuring each page so that there's an easily spotted menu or set of links to get around the site. They can be in a sidebar or along the bottom of the page. The point is to make them easy for visitors to find. Satisfy the visitors and the crawlers are likely to rank the site as one with more authority.

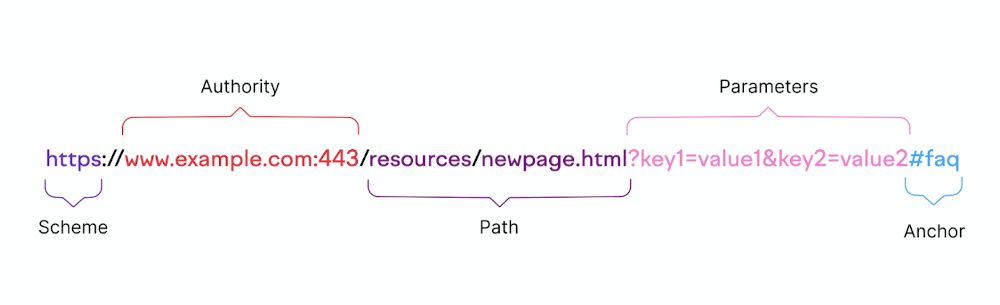

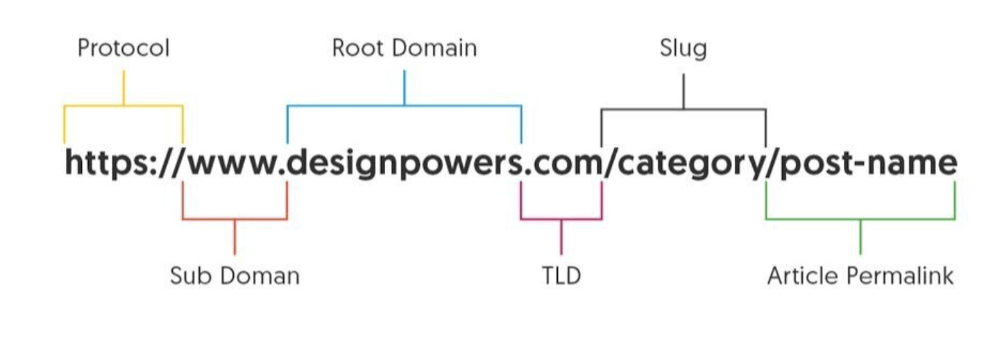

SEO Issue #12: Unfriendly URL Configuration

You already know how important it is to choose a URL that's simple and easy for people to remember. You also want something that looks good on a business card or any type of digital correspondence. The question that remains is how well you use that same strategy when it comes to naming all of the other pages associated with your site.

Ideally, you don't need pages with unfriendly URLs that contain a string of numbers and symbols. What you do want is URLs that provide instant insight into what type of content or other information will be found on that page. That's not only important to anyone who visits your site; like every other aspect of the page, search engine crawlers are looking for anything that helps them rate and rank those pages.

An example of an unfriendly URL on a garden site page could be something along the lines of the main URL followed by something like "index.php?=46832." Who would want to remember that? And as it relates to SEO, what does it tell the crawler about the page content?

Consider how much cleaner and easier it would be if the data following the main URL was something like "yellow-roses", with a hyphen separating the words. It's easy to remember and the crawlers know instantly what sort of information is found on the page.
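Friendly URL segments like this are usually generated from the page title. The Python sketch below shows one common approach: strip accents, lowercase everything, and join the words with hyphens. The `slugify` name is a convention rather than a built-in, and many content management systems already do something similar for you.

```python
import re
import unicodedata


def slugify(title: str) -> str:
    """Turn a page title into a lowercase, hyphen-separated URL segment."""
    # Decompose accented characters, then drop anything that is not ASCII.
    ascii_text = (
        unicodedata.normalize("NFKD", title)
        .encode("ascii", "ignore")
        .decode("ascii")
    )
    # Replace every run of non-alphanumeric characters with one hyphen.
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")
```

With this in place, a garden page titled "Yellow Roses: Care & Pruning" would live at a path ending in "yellow-roses-care-pruning" instead of a string of numbers.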

SEO Issue #13: Bad or Broken Links

Whether linking to another page on the site or to an authoritative site found elsewhere, those links must work properly every time. Strong links that are reliable and go exactly where they are intended to go do quite a bit to increase the perceived quality of your pages. Unfortunately, things do change and links that once worked fine may lead to nowhere. That can lead to a reduction in the page rankings as well as frustrate site visitors.

A periodic review of all links, internal and external, is a good way to find and resolve links that are no longer working or now redirect to information that has no relevance to your page. Make it a point to hold a full site review at least once a month. Promptly get rid of any links that no longer work and replace them with new ones that function properly.
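A monthly review is much easier when part of it is scripted. The Python sketch below pulls every link out of a page's HTML and reports the ones that come back with an error status. To keep the example self-contained, the status lookup is passed in as a function (`get_status`, a hypothetical name); in a real audit it would make an HTTP request for each link.

```python
from html.parser import HTMLParser
from typing import Callable


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def extract_links(html: str) -> list[str]:
    collector = LinkCollector()
    collector.feed(html)
    return collector.links


def find_broken(links: list[str], get_status: Callable[[str], int]) -> list[str]:
    """Return the links whose HTTP status code is 400 or higher."""
    return [link for link in links if get_status(link) >= 400]


# Stand-in for real HTTP requests: made-up status codes for two links.
fake_statuses = {"/roses": 200, "/old-page": 404}
page = '<a href="/roses">Roses</a> <a href="/old-page">Old page</a>'
broken = find_broken(extract_links(page), lambda url: fake_statuses[url])
```

This only finds links that fail outright. A link that still loads but now points to irrelevant content needs a human to look at it.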

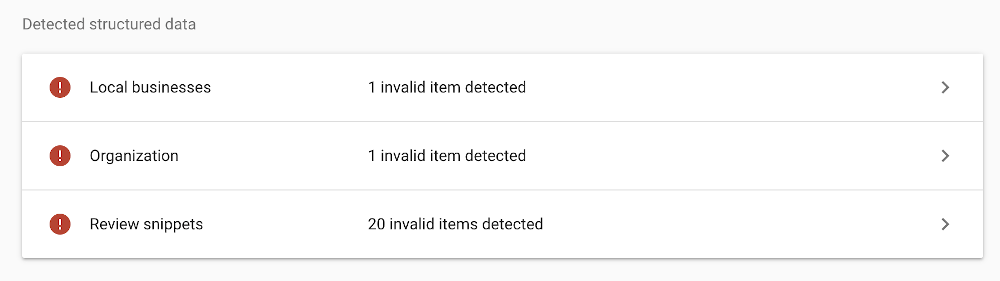

SEO Issue #14: Insufficient or Incorrect Use of Structured Data

What does structured data mean in terms of search engine rankings? It's all about developing and following a uniform way to provide crawlers with data about the page. This makes it much easier for crawlers to classify and categorize each of your pages properly. The outcome is more favourable rankings.

Insufficient or incorrect use of structured data remains a prevalent issue impacting website visibility and search engine performance. Common errors include incomplete markup, inaccurate schema implementation, and misuse of structured data types.

To address these issues, website owners should regularly audit their structured data implementation, ensuring compliance with Schema.org guidelines and leveraging validation tools like Google's Rich Results Test, which replaced the older Structured Data Testing Tool, and the Schema Markup Validator.
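For a sense of what structured data looks like in practice, here is a Python sketch that builds a JSON-LD snippet for an article using Schema.org's `Article` type. The `article_jsonld` helper and the sample values are made up for illustration, and only a handful of the properties Schema.org defines are shown.

```python
import json


def article_jsonld(headline: str, author: str, date_published: str) -> str:
    """Return a JSON-LD script tag describing an article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
    }
    # Escape "</" so a value can never close the script tag early.
    payload = json.dumps(data).replace("</", "<\\/")
    return '<script type="application/ld+json">' + payload + "</script>"


tag = article_jsonld("Growing Yellow Roses", "Jane Doe", "2024-05-01")
```

The resulting tag goes in the page's HTML, and running the page through a validator afterward confirms that the markup is read the way you intended.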

SEO Issue #15: Multiple Versions of the Homepage

Earlier, the hazards of having older versions of web pages still active were discussed. That doesn't just apply to the secondary pages of your website or blog. It also has to do with having several active versions of the homepage that in theory should be pointing to the same place.

Here's why this can cause issues in terms of ranking. Search engines may have all of those home pages showing as active. The result is that depending on how people enter the URL, the page visibility for each may be higher or lower. Overall, this reduces the ability to adequately assess the amount of inbound traffic the home page receives.

There are some ways to clear things up a bit. First, if you have http and https versions, set up a permanent (301) redirect from the http version to the https one at once. The http version is not doing anything for your reputation, your ranking, or the security of your site visitors.

You can also use software tools to see how many variations are currently indexed and figure out where they direct to. Get rid of any that lead to nowhere or change the data so they redirect to the most recent home page.
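The idea of folding every homepage variation into one can be sketched in Python. The example below assumes the preferred homepage is https://www.example.com/ and that the listed paths are the variants in play; both are placeholders to swap for your own. It only decides where a URL should redirect. The redirect itself is configured on the web server.

```python
from typing import Optional
from urllib.parse import urlsplit

# Assumed preferred homepage and known variant paths; substitute your own.
PREFERRED_HOME = "https://www.example.com/"
HOME_PATHS = {"", "/", "/index.html", "/index.php", "/home"}


def is_home_variant(url: str) -> bool:
    """Return True when the URL is some version of the homepage."""
    parts = urlsplit(url)
    host = parts.netloc.lower().removeprefix("www.")
    preferred_host = urlsplit(PREFERRED_HOME).netloc.removeprefix("www.")
    return host == preferred_host and parts.path.lower() in HOME_PATHS


def redirect_target(url: str) -> Optional[str]:
    """Return the preferred homepage if the URL is a non-preferred variant."""
    if url != PREFERRED_HOME and is_home_variant(url):
        return PREFERRED_HOME
    return None
```

Here `redirect_target("http://example.com/index.html")` points to the preferred homepage, while an ordinary page such as "https://www.example.com/roses" is left alone.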

SEO Issue #16: Redirecting to Pages Using the Right Language

If your website or blog is aimed at international as well as domestic consumers, the last thing that you need is for them to click on a URL and end up staring at text they don't understand. Since 2011, Google has made it much easier to ensure that users get to see page content that they can read. Known as the hreflang tag, this helpful resource signals Google to display the right page based on data about the location of the user or the language used to initiate the search.

Here are some of the most common issues with hreflang tags and how to address them:

- Incorrect Implementation: Ensure that hreflang tags are correctly placed in the HTML head section of each page, and that they reference every language variant of the page, including a self-referencing tag.

- Missing Return Tags: Each hreflang tag must have a corresponding return tag on the referenced page. This ensures search engines understand the relationship between the pages.

- Misconfigured Language and Region Codes: Use the correct ISO codes: ISO 639-1 for the language and, optionally, ISO 3166-1 Alpha 2 for the region. Incorrect codes can lead to errors and improper targeting.

- Canonical and Hreflang Conflicts: Make sure hreflang tags are consistent with canonical tags. Conflicts between these tags can confuse search engines and lead to indexing issues.

- Using x-default: For pages that act as a default when no specific language is detected, use the "x-default" hreflang tag to indicate this.

Regular audits can help identify and fix hreflang issues before they impact your site's performance.
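Two of the checks above lend themselves to a short script. The Python sketch below generates a full set of hreflang tags, including the x-default entry, and then looks for missing return tags across a group of pages. The function names, language codes, and URLs are made up for illustration, and the URLs are assumed to be plain values that need no HTML escaping.

```python
def hreflang_tags(variants: dict[str, str], default_lang: str) -> list[str]:
    """Build the link tags to place on every language variant of a page."""
    tags = [
        f'<link rel="alternate" hreflang="{lang}" href="{url}" />'
        for lang, url in variants.items()
    ]
    tags.append(
        f'<link rel="alternate" hreflang="x-default" href="{variants[default_lang]}" />'
    )
    return tags


def missing_return_tags(pages: dict[str, set[str]]) -> list[tuple[str, str]]:
    """Find hreflang links that are not returned by the page they point to.

    `pages` maps each URL to the set of URLs its hreflang tags reference.
    The result lists (source, target) pairs where target does not link back.
    """
    return sorted(
        (source, target)
        for source, targets in pages.items()
        for target in targets
        if target != source and source not in pages.get(target, set())
    )
```

Because the same list of tags goes on every variant, generating it from one dictionary covers both the self-referencing tag and the return tags in one go.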

SEO Issue #17: Missing or Non-Optimized Meta Descriptions

Some may say that meta descriptions are not as useful as in the past. They are not going anywhere soon, and they are still quite important. While the weight of meta descriptions in SEO ranking may not be as significant as other factors, they are still crucial for click-through rates (CTR). Meta descriptions provide a brief summary of the page content and can influence whether users click on the link in the search results.

- Character Limit: The optimal length for a meta description is around 155-160 characters. This ensures that the description is concise and not truncated in search results, providing a clear and complete message to users.

- Impact on SEO: Meta descriptions don't directly influence search engine rankings, but they do impact user engagement. A well-crafted meta description can increase CTR, positively affecting your site's SEO.

- Regular Updates: It's important to keep meta descriptions updated, especially when the page content changes. This ensures that the descriptions accurately reflect the page content and remain relevant.

- SEO Audits: Regular SEO audits are essential to identify and fix issues, including problems with meta descriptions. Ensuring all pages have optimized meta descriptions is a part of maintaining overall SEO health.

In summary, keeping your meta descriptions up-to-date and optimized is an essential part of a comprehensive SEO strategy, and regular site audits can help ensure that all SEO elements, including meta descriptions, are in good shape.
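As part of an audit, a quick length check can flag descriptions that are missing or likely to be cut off. The Python sketch below uses the upper end of the 155-160 character range mentioned above as its limit. The `check_meta_description` helper is an illustration, and the limit is a rule of thumb rather than a hard cutoff enforced by any search engine.

```python
from typing import Optional

MAX_LENGTH = 160  # upper end of the 155-160 character guideline


def check_meta_description(description: Optional[str]) -> str:
    """Classify a meta description as missing, too long, or ok."""
    # Collapse runs of whitespace so stray line breaks don't inflate the count.
    text = " ".join(description.split()) if description else ""
    if not text:
        return "missing"
    if len(text) > MAX_LENGTH:
        return "too long"
    return "ok"
```

Running this over every page gives you a short list of descriptions to write or trim, which is a sensible starting point for the audit.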

Time for an SEO Audit?

When was the last time that you or a professional spent the time to check your site for SEO problems? If you can't remember, that's a sure sign you need to have a full site evaluation done immediately. While many of these SEO issues are simple to fix, someone has to put in the effort to identify and address them. Take your time, check for each one individually, and do whatever it takes to ensure your pages comply with current SEO best practices. You can expect your rankings to improve and see more qualified traffic showing up.