MUVERA: Bridging Speed and Precision in Multi-Vector Search


Type a question into Google today, and the answer often feels eerily precise — not just a page with the right keywords, but one that seems to “understand” your intent. This isn’t magic; it’s the evolution of search technology from basic keyword matching to deep semantic retrieval.

Now, Google’s latest research, MUVERA (Multi-Vector Retrieval via Fixed Dimensional Encodings), takes that evolution a step further. It offers a breakthrough approach to combining the rich detail capture of multi-vector models with the speed of single-vector search — a tradeoff that’s long challenged engineers.

For SEO professionals, content strategists, and technical marketers, this isn’t just a new piece of AI jargon. MUVERA has real implications for how your content gets discovered, ranked, and surfaced, especially in competitive niches where contextual depth is the deciding factor. In this article, we’ll break down how MUVERA works, why it matters, and what it means for the future of content strategy.

The Evolution of Search: From Keywords to Context

Search used to be simple. You typed a word, the engine looked for matches, and results appeared. But that simplicity masked a huge limitation: search engines could only match literal keywords, not meaning. If someone searched "best way to improve web traffic", the system might miss a page titled "Effective SEO Strategies" because the exact words didn’t match.

This keyword-centric approach dominated for decades, from early AltaVista to the first versions of Google. But as user queries became longer, more conversational, and more complex, the cracks began to show. People wanted answers, not just word matches.

The Rise of Contextual Search

The next leap forward came with semantic search, the idea that engines should understand what you mean, not just what you type. This required machine learning models capable of processing meaning, intent, and context. The turning point came with word embeddings like Word2Vec and GloVe, and later contextual language models like BERT, which allowed search algorithms to map words and passages into a high-dimensional vector space.

In this space, words with similar meanings cluster together — “car” and “automobile” end up close, “dog” and “cat” are near each other, and “banana” is nowhere near “server rack.” Instead of brittle keyword matches, the system could now find semantically related content.

That’s where vector search emerged as the powerhouse behind modern information retrieval.
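To make the clustering idea concrete, here is a minimal sketch of how closeness in a vector space is usually measured. The three-dimensional vectors are hand-picked toy values chosen for illustration, not output from Word2Vec, GloVe, BERT, or any real model, and the `cosine` helper is our own.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hand-picked toy vectors (assumption: NOT real model output).
toy_embeddings = {
    "car":        [0.90, 0.10, 0.05],
    "automobile": [0.85, 0.15, 0.10],
    "banana":     [0.05, 0.10, 0.95],
}

car_vs_automobile = cosine(toy_embeddings["car"], toy_embeddings["automobile"])
car_vs_banana = cosine(toy_embeddings["car"], toy_embeddings["banana"])

# Related words score close to 1, unrelated words score much lower.
print(round(car_vs_automobile, 3), round(car_vs_banana, 3))
```

Real embeddings have hundreds of dimensions, but the comparison works the same way.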

The Single-Vector vs. Multi-Vector Challenge

Vector search comes in two flavors — each with its strengths and tradeoffs.

Single-Vector Embeddings

A single-vector approach condenses an entire document, passage, or paragraph into one dense vector. This is fast, especially when paired with Maximum Inner Product Search (MIPS), and is the go-to choice for massive search engines that need sub-second results. But there’s a catch: squeezing all meaning into one vector inevitably loses granularity. If the query relates to a small detail buried deep in the text, the single vector might not “light up” enough to find it.

Multi-Vector Models

In a multi-vector approach, instead of one representation, the document is broken into multiple vectors, each tied to a key token, sentence, or concept. This makes retrieval far more precise. A search for “Chamfer similarity in 3D shape matching” could directly align with the specific vector representing that concept, even if it’s a niche detail in a long paper.

The downside? Multi-vector retrieval traditionally uses computationally heavy methods like Chamfer matching, which compare each query vector against every document vector. This scales poorly, turning large-scale, real-time search into a bottleneck.
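Here is a minimal sketch of why that matching is expensive. Chamfer similarity, as used in multi-vector retrieval, takes each query vector, finds its best inner product among the document’s vectors, and sums those best scores. The toy vectors and the function names below are illustrative assumptions.

```python
def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def chamfer_similarity(query_vecs, doc_vecs):
    """Sum, over query vectors, of the best inner product with any doc vector."""
    return sum(max(dot(q, d) for d in doc_vecs) for q in query_vecs)

# Toy 2-dimensional vectors (assumption: made up for illustration).
query = [[1.0, 0.0], [0.0, 1.0]]
doc_a = [[0.9, 0.1], [0.1, 0.8], [0.5, 0.5]]  # covers both query aspects
doc_b = [[0.7, 0.0], [0.6, 0.1]]              # covers only the first aspect

score_a = chamfer_similarity(query, doc_a)
score_b = chamfer_similarity(query, doc_b)

# Every query vector is compared with every document vector.
comparisons = len(query) * len(doc_a)
print(score_a, score_b, comparisons)
```

The cost per document grows with the number of query vectors times the number of document vectors, and that cost is paid again for every document scored. That is the bottleneck described above.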

Enter MUVERA: A New Balance of Speed and Precision

Google’s MUVERA research proposes a breakthrough architecture that bridges this long-standing gap. It introduces Fixed Dimensional Encodings (FDEs) to optimize multi-vector representations for fast, scalable retrieval without losing the precision multi-vector systems are known for.

Instead of running slow, exhaustive matching, MUVERA uses optimized indexing and vector projection techniques that allow multi-vector data to be queried at speeds comparable to single-vector systems — a potential game-changer for both web-scale search and niche, high-value content retrieval.

For SEO professionals and content strategists, this means we’re entering an era where search systems can “understand” more of your content, faster than ever before. The technical world calls this scalable semantic retrieval — in business terms, it’s the key to making every sentence of your content discoverable.

Key takeaway: Search is no longer about chasing keywords. The future is about representing meaning in ways machines can retrieve instantly and precisely — and MUVERA may be the architecture that makes this future real.

How MUVERA Redefines Vector Search

MUVERA — short for Multi-Vector Retrieval via Fixed Dimensional Encodings — is more than an incremental upgrade. It’s a rethinking of how multi-vector search can be executed without sacrificing speed or accuracy. Traditional multi-vector approaches bog down at scale because every query vector has to be matched against every document vector, an operation that explodes in complexity as datasets grow.

MUVERA sidesteps that bottleneck with a two-stage retrieval pipeline that cleverly separates the “quick scan” from the “deep analysis.” This architecture means we can now have both lightning-fast lookups and fine-grained semantic precision — a balance that has eluded vector search for years.

The Two-Stage MUVERA Pipeline

Stage 1: Fixed Dimensional Encodings (FDEs) for Fast Retrieval

The first stage is where MUVERA’s elegance shows. Instead of keeping hundreds of vectors per document in the initial search pass, MUVERA compresses all multi-vectors into a single, fixed-length vector — the Fixed Dimensional Encoding (FDE).

This isn’t a crude average or sum. The FDE is constructed so that the inner product between a query FDE and a document FDE approximates the Chamfer similarity between the original sets of vectors, while the dimensionality stays fixed. This unlocks a major speed advantage: FDEs can be indexed and searched using Maximum Inner-Product Search (MIPS), the same technology that powers ultra-fast single-vector search engines.

The result? MUVERA can sweep through millions of documents in milliseconds, returning a shortlist of high-probability matches without losing much of the underlying contextual richness.
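For readers who want a feel for the mechanics, here is a heavily simplified sketch of an FDE-style encoding. It assumes the core idea is to split the vector space into buckets with random hyperplanes, sum the query vectors in each bucket, average the document vectors in each bucket, and concatenate the results over several repetitions. The function names and parameters are our own, and the sketch leaves out refinements from the published method such as filling empty buckets and random projections that shrink the output, so treat it as an illustration rather than the reference implementation.

```python
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def make_partitions(dim, num_planes, num_reps, seed=0):
    """Draw random Gaussian hyperplanes for each repetition."""
    rng = random.Random(seed)
    return [[[rng.gauss(0.0, 1.0) for _ in range(dim)]
             for _ in range(num_planes)]
            for _ in range(num_reps)]

def bucket_of(vec, planes):
    """Bucket index built from the sign of vec against each hyperplane."""
    index = 0
    for plane in planes:
        index = (index << 1) | (1 if dot(vec, plane) > 0 else 0)
    return index

def encode(vectors, partitions, is_query):
    """Concatenate per-bucket sums (query) or per-bucket means (document)."""
    dim = len(vectors[0])
    fde = []
    for planes in partitions:
        num_buckets = 2 ** len(planes)
        sums = [[0.0] * dim for _ in range(num_buckets)]
        counts = [0] * num_buckets
        for vec in vectors:
            b = bucket_of(vec, planes)
            counts[b] += 1
            for i, x in enumerate(vec):
                sums[b][i] += x
        for b in range(num_buckets):
            # Queries keep the sum; documents use the mean of each bucket.
            scale = 1.0 if is_query or counts[b] == 0 else 1.0 / counts[b]
            fde.extend(x * scale for x in sums[b])
    return fde

# Toy data (assumption: random vectors standing in for token embeddings).
rng = random.Random(1)
partitions = make_partitions(dim=4, num_planes=2, num_reps=3)
short_doc = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(3)]
long_doc = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(7)]
query = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(2)]

fde_short = encode(short_doc, partitions, is_query=False)
fde_long = encode(long_doc, partitions, is_query=False)
fde_query = encode(query, partitions, is_query=True)
approx_score = dot(fde_query, fde_long)  # a single inner product
```

Note that a 3-vector document and a 7-vector document both produce an encoding of the same length (repetitions × buckets × dimension). That fixed length is what lets a standard MIPS index handle them.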

Stage 2: Re-ranking with Full Chamfer Similarity for Precision

Speed is great — but precision is what determines whether the right answer makes it to the top. That’s where MUVERA’s second stage comes in.

Once the system has its shortlist of candidate documents from Stage 1, it applies the full Chamfer similarity computation — the gold standard for multi-vector matching — but only to that small subset. This selective re-ranking process ensures that the final search results reflect the exact semantic details of the query, down to niche concepts that single-vector systems would gloss over.

The genius here lies in the balance:

- Massive computational savings by avoiding exhaustive Chamfer matching on every document.

- Maximum accuracy where it matters — in the top-ranked results.

This two-step process transforms what was once too slow for production into a scalable, real-time solution for multi-vector retrieval.
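The two stages can be sketched in a few lines. To keep the example short, the shortlist step below uses a deliberately crude one-bucket encoding (sum of query vectors, mean of document vectors) as a stand-in for a real FDE, and it scans every document instead of using a MIPS index. The corpus, document ids, and function names are all hypothetical.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def chamfer(query_vecs, doc_vecs):
    """Full multi-vector score: best match per query vector, summed."""
    return sum(max(dot(q, d) for d in doc_vecs) for q in query_vecs)

def pooled(vectors, average):
    """Stand-in for an FDE: one fixed-length vector per set of vectors."""
    dim = len(vectors[0])
    total = [sum(v[i] for v in vectors) for i in range(dim)]
    return [x / len(vectors) for x in total] if average else total

def two_stage_search(query_vecs, corpus, shortlist_size, top_k):
    """corpus maps doc id -> list of vectors. Returns [(doc_id, score), ...]."""
    query_code = pooled(query_vecs, average=False)
    # In a real system these codes are computed once, at indexing time.
    doc_codes = {d: pooled(vecs, average=True) for d, vecs in corpus.items()}

    # Stage 1: one cheap inner product per document, keep the best few.
    shortlist = sorted(corpus,
                       key=lambda d: dot(query_code, doc_codes[d]),
                       reverse=True)[:shortlist_size]

    # Stage 2: full Chamfer similarity, but only on the shortlist.
    reranked = sorted(((d, chamfer(query_vecs, corpus[d])) for d in shortlist),
                      key=lambda pair: pair[1],
                      reverse=True)
    return reranked[:top_k]

# Toy corpus (assumption: made-up 2-dimensional vectors).
corpus = {
    "guide":    [[0.9, 0.1], [0.1, 0.8], [0.5, 0.5]],
    "brief":    [[0.7, 0.0], [0.6, 0.1]],
    "offtopic": [[-0.5, -0.5], [-0.2, -0.9]],
}
query = [[1.0, 0.0], [0.0, 1.0]]

results = two_stage_search(query, corpus, shortlist_size=2, top_k=2)
print(results)
```

The expensive Chamfer computation runs on two documents here instead of three. At web scale the same pattern means running it on a few hundred candidates instead of millions.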

The Practical Implications for Your Content Strategy

MUVERA is not just a research novelty for AI engineers. It’s a direct signal to content creators, SEO specialists, and digital strategists: the search engines of the near future will be better at finding depth, not just breadth.

Rewarding High-Quality, Nuanced Content

If you’ve been producing rich, multi-layered content — detailed product guides, in-depth industry analyses, or well-researched blog posts — MUVERA tilts the playing field in your favor. With its ability to capture multiple contextual dimensions of a document, search engines powered by MUVERA can surface specific insights buried deep in your text, even if they don’t match the user’s query word-for-word.

That means you’re no longer competing only on surface keyword density. Instead, the conceptual completeness of your content becomes the ranking factor to watch, and it can be enhanced through effective keyword grouping.

Enabling Scalable Knowledge-Sharing

For organizations with massive content repositories — think enterprise documentation portals, academic research databases, or e-commerce sites with millions of SKUs — MUVERA’s efficiency is a breakthrough.

With memory savings of up to 32x from compressing the FDEs with product quantization, the infrastructure costs of running semantic search at scale plummet. Suddenly, advanced context-aware search capabilities are within reach for businesses that previously couldn’t justify the expense.
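As a back-of-the-envelope check on where a 32x figure can come from, the sketch below assumes one common product quantization setup: every block of 8 float32 values (32 bytes) is replaced by a 1-byte index into a 256-entry codebook. The document count and encoding size are illustrative assumptions, and the small cost of storing the codebooks themselves is ignored.

```python
def pq_compression_ratio(dims_per_block, bytes_per_float=4, bytes_per_code=1):
    """Raw size of one block divided by the size of its codebook index."""
    return (dims_per_block * bytes_per_float) / bytes_per_code

def index_size_bytes(num_docs, fde_dim, dims_per_block=None):
    """Size of all encodings, raw (float32) or product-quantized."""
    raw = num_docs * fde_dim * 4
    if dims_per_block is None:
        return raw
    return raw / pq_compression_ratio(dims_per_block)

# Illustrative assumptions: 1 million documents, 10,240-dimensional encodings.
ratio = pq_compression_ratio(dims_per_block=8)
raw_bytes = index_size_bytes(1_000_000, 10_240)
compressed_bytes = index_size_bytes(1_000_000, 10_240, dims_per_block=8)

print(ratio)                   # 32.0
print(raw_bytes / 1e9)         # 40.96 (GB)
print(compressed_bytes / 1e9)  # 1.28 (GB)
```

Under these assumptions an index that would need roughly 41 GB of memory fits in a little over 1 GB.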

From a strategic perspective, this means:

- Faster discovery of niche or long-tail information.

- Better matching between user intent and content depth.

- The ability to maintain search quality even as your dataset grows exponentially.

In other words, MUVERA gives you the technical backbone to make every sentence of your content work harder — without the latency or cost penalties of older systems.

Key takeaway: MUVERA isn’t just optimizing search; it’s reshaping how content visibility works in the age of semantic retrieval. The future winners in SEO will be the ones who produce content rich enough to be fully leveraged by multi-vector systems — and MUVERA just made that future scalable.

The history of search has been a steady climb toward greater understanding of meaning. From keyword lookups to semantic embeddings, and now to MUVERA’s scalable multi-vector retrieval, each step has aimed to bridge the gap between human intent and machine comprehension.

With its two-stage pipeline — fast FDE-based filtering followed by precise Chamfer re-ranking — MUVERA makes it possible to run deep, context-aware search at web scale without prohibitive costs or delays. For those creating nuanced, detail-rich content, this means greater discoverability and more relevant placement in results.

The takeaway is clear: the next phase of SEO and content strategy won’t just reward relevance — it will reward contextual completeness. As MUVERA and similar architectures become integrated into major search engines, every meaningful detail in your content will have a better chance of connecting with the audience that needs it.

Case Study: Before MUVERA vs. After MUVERA

Imagine a user searches for “how to secure patient data in cloud healthcare apps”.

Your site hosts a detailed compliance guide with a section deep inside about HIPAA regulations — but those exact keywords don’t appear in the title or introduction.

Before MUVERA (Traditional Multi-Vector Retrieval)

In a single-vector system, the document is reduced to one dense vector. Any subtle section — like your HIPAA compliance advice — risks being “averaged out” and ignored.

In traditional multi-vector models such as ColBERT, each token gets its own vector, which improves precision. But here’s the trade-off: computing Chamfer similarity between the query and every document’s vectors is too slow for large-scale search. To stay fast, engines often scan fewer vectors, meaning niche content might never make it to the candidate set for ranking.

After MUVERA (FDE + Two-Stage Search)

With MUVERA, the document’s multi-vectors are compressed into a Fixed Dimensional Encoding (FDE) that retains semantic richness.

- Stage 1: Fast candidate retrieval runs nearly as quickly as single-vector search, so your page still makes the shortlist, even if the relevant section is deep in the text.

- Stage 2: The shortlist undergoes full Chamfer re-ranking, ensuring your HIPAA section precisely matches the searcher’s intent.

Result: Highly specific, long-tail queries now have a much better chance of surfacing your nuanced content — even without exact keyword matches in prime positions.

SEO Strategy Changes Based on MUVERA Awareness

Depth Matters More Than Ever

MUVERA rewards conceptual completeness. Long-form, multi-angle content can now rank for granular subtopics as well as broad queries, making structured SEO strategy development more important than ever.

Optimize for Semantic Richness, Not Just Keywords

Rather than repeating keywords, focus on making each subheading, section, and paragraph semantically distinct. Each new vector your document generates is another retrieval opportunity.

Leverage Entity Relationships

Tie related concepts together in close proximity — for example, “HIPAA compliance” + “cloud encryption” + “healthcare app security.” MUVERA’s precision in matching related entities can surface your page for compound queries.

Rethink Thin Pages

Pages that lack depth won’t generate enough vector diversity. Consolidating thin, related pages into rich pillar pages improves multi-vector strength.

Structure for Better Vector Mapping

Use clear headings, bullet points, and follow proven readability strategies. While MUVERA is algorithm-driven, structure helps ensure distinct ideas are encoded into separate, meaningful vectors.

FAQ: MUVERA and SEO

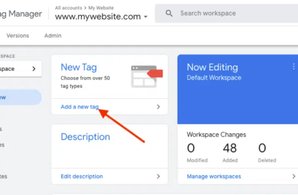

Q: Is MUVERA already part of Google Search?

A: As of now, MUVERA is a research project, but its principles could influence future large-scale search systems.

Q: Will MUVERA replace single-vector search?

A: Not entirely. Single-vector remains efficient for certain queries, but MUVERA’s hybrid model could become standard for complex, context-rich searches.

Q: How should I adapt my SEO strategy for MUVERA?

A: Invest in depth, semantic coverage, and topic relationships rather than keyword stuffing. MUVERA’s strength lies in uncovering nuanced, multi-faceted content, the kind that benefits from long-term SEO strategy.

Q: Does MUVERA improve long-tail keyword targeting?

A: Yes. MUVERA’s ability to preserve contextual detail means highly specific queries are more likely to surface deep, niche content.

Q: What kind of content benefits the most from MUVERA?

A: Long-form, multi-layered content that covers topics from several angles, including industry guides, case studies, and technical documentation.

Q: Will thin or single-topic pages still rank well?

A: Less likely. MUVERA favors semantically rich, structured pages. Consolidating thin content into pillar pages increases retrieval odds.

Q: Does MUVERA reduce infrastructure costs for large websites?

A: Yes. Through Fixed Dimensional Encodings and product quantization, MUVERA reduces memory use by up to 32x, making semantic retrieval scalable.

Q: How soon should SEO professionals prepare for MUVERA?

A: It’s still research, but aligning content strategies with semantic depth and contextual richness today ensures you’re ready once similar systems are adopted.
