Technical SEO Checklist for 2026: The Prioritized, Actionable Guide

How to use this checklist (prioritization that actually works)

Most “technical SEO checklists” fail because they treat every item as equal. In practice, technical SEO is a dependency chain:

- If key pages are not indexable → rankings can’t happen

- If duplicates are uncontrolled → signals get diluted

- If templates are slow or unstable → quality and conversion suffer

The simplest prioritization model (Impact × Effort)

Score each issue:

- Impact: Will fixing it change crawl/index/rank/conversion meaningfully?

- Effort: dev time, release risk, cross-team dependencies

Use a 3-tier system:

- P0 (Fix now): blocks crawling/indexing, breaks canonicalization, causes mass 404/soft 404, or removes core content for bots

- P1 (Fix next): noticeably affects discoverability, performance, or templates at scale

- P2 (Optimize): improvements that help, but won’t rescue a broken foundation

You’ll see this structure throughout the checklist.
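As a rough illustration of the scoring model above, here is a minimal Python sketch. The 1 to 5 scales, the `blocks_indexing` flag, and the tier cut-offs are assumptions made for this example, not part of any official framework; adjust them to your own backlog.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    name: str
    impact: int  # assumed scale: 1 (low) to 5 (high)
    effort: int  # assumed scale: 1 (low) to 5 (high)
    blocks_indexing: bool = False  # hypothetical flag for P0-style blockers


def tier(issue: Issue) -> str:
    """Map an issue to P0/P1/P2 using illustrative cut-offs."""
    if issue.blocks_indexing:
        return "P0"
    if issue.impact >= 4:
        return "P1"
    return "P2"


def prioritize(issues):
    """Sort by tier first, then by impact-to-effort ratio (highest first)."""
    order = {"P0": 0, "P1": 1, "P2": 2}
    return sorted(issues, key=lambda i: (order[tier(i)], -i.impact / i.effort))


backlog = [
    Issue("Slow hero image", impact=3, effort=2),
    Issue("Global noindex bug", impact=5, effort=1, blocks_indexing=True),
    Issue("Orphaned service pages", impact=4, effort=2),
]
for issue in prioritize(backlog):
    print(tier(issue), issue.name)
```

Sorting by tier before ratio keeps a cheap P2 tweak from jumping ahead of a P0 blocker.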

P0: Crawlability & indexability (Google must be able to see you)

In 2026, this is still the foundation. Even with AI features in Search, Google’s guidance is consistent: follow fundamental SEO best practices and make your content accessible and indexable.

P0 checklist: “Can Google crawl and index my key pages?”

A. Robots.txt is not “noindex.”

A robots.txt file controls crawling, but Google explicitly notes it’s not a mechanism to keep pages out of Google; if you need a page excluded from Search, use noindex (or protect it with authentication).

What to do

- Confirm that robots.txt is reachable at the correct host root and does not block critical paths (e.g., /products/, /locations/, /blog/).

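- If a page should not appear in search results, use a noindex rule (robots meta tag or X-Robots-Tag HTTP header), not robots.txt. Keep that page crawlable, because Google can only see a noindex rule on a page it is allowed to fetch.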
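One way to spot-check the first item is with Python's standard-library robots.txt parser. This is a sketch only: the sample rules and path list are made up for illustration, and `urllib.robotparser` does simple prefix matching, so it may not mirror Google's handling of wildcards exactly.

```python
from urllib.robotparser import RobotFileParser


def blocked_paths(robots_txt, paths, user_agent="Googlebot"):
    """Return the paths that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [p for p in paths if not parser.can_fetch(user_agent, p)]


# Hypothetical robots.txt content; in practice, fetch it from your host root.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /cart/
Disallow: /blog/
"""

critical = ["/products/", "/locations/", "/blog/"]
print(blocked_paths(SAMPLE_ROBOTS, critical))
```

Any critical path in the returned list deserves a closer look before anything else on the checklist.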

B. Confirm important pages are indexable

- No accidental noindex on money pages, service pages, location pages, or core categories.

- No “indexability broken by template” (e.g., a global noindex bug).

C. Internal links must make content discoverable

Google explicitly highlights making content easy to find via internal linking as a best practice for AI features.

D. XML sitemap is submitted — but treated properly

Sitemaps are useful, but remember: for canonicalization, sitemaps are a weaker signal than redirects or rel=canonical.

A practical way to run P0 in 30 minutes

Create a list of your Top 20 priority URLs (your highest-value pages). For each URL, check:

- Crawl allowed? (robots)

- Index allowed? (noindex meta)

- Canonical consistent?

- Internal links exist?

If any of these fail at scale, stop and fix them before proceeding with “nice-to-have” work.
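The index and canonical checks in that list can be roughed out with the standard library alone. The sketch below assumes you already have each page's HTML and response headers (how you fetch them is up to you); the function and key names are illustrative.

```python
from html.parser import HTMLParser


class HeadSignals(HTMLParser):
    """Collect robots meta directives and the rel=canonical href."""

    def __init__(self):
        super().__init__()
        self.robots = []
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "meta" and (a.get("name") or "").lower() in ("robots", "googlebot"):
            self.robots.append((a.get("content") or "").lower())
        elif tag == "link" and "canonical" in (a.get("rel") or "").lower().split():
            self.canonical = a.get("href")


def index_signals(url, html, headers=None):
    """Summarize noindex and canonical signals for one URL."""
    parser = HeadSignals()
    parser.feed(html)
    lowered = {k.lower(): v for k, v in (headers or {}).items()}
    header_value = lowered.get("x-robots-tag", "").lower()
    noindex = "noindex" in header_value or any("noindex" in r for r in parser.robots)
    return {
        "index_allowed": not noindex,
        "canonical": parser.canonical,
        "canonical_is_self": parser.canonical == url,
    }


page = (
    '<html><head><meta name="robots" content="noindex, follow">'
    '<link rel="canonical" href="https://example.com/services/"></head></html>'
)
print(index_signals("https://example.com/services/", page))
```

Run it over the Top 20 list and review any URL where `index_allowed` is false or the canonical points elsewhere.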

P0–P1: Canonicals, duplicates, parameters (signal consolidation)

Duplicate URLs are one of the fastest ways to waste crawl resources and weaken rankings. Google explains how it chooses canonical URLs and how you can consolidate duplicates.

Google’s canonicalization hierarchy (the part most checklists skip)

Google describes multiple ways to indicate canonical preference:

- Redirects are a strong canonicalization signal

- rel="canonical" is a strong canonicalization signal

- Sitemap inclusion is a simple way to suggest canonicals at scale (weaker signal)

And critically:

- Google advises not using robots.txt as a canonicalization method.

P0–P1 checklist: duplicates you should control in 2026

A. “Same page, multiple URLs” patterns

- http:// vs https:// (should resolve to one)

- www vs non-www (should resolve to one)

- trailing slash vs no trailing slash (pick one rule)

- parameterized URLs (?utm=, ?ref=, filters, sorts)
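To make the patterns above concrete, here is a small sketch that collapses common variants into one preferred form. The preferred host, the trailing-slash rule, and the list of tracking parameters are assumptions for this example; your site's rules may differ.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

TRACKING_PARAMS = {"ref"}  # assumed list; utm_* parameters are handled by prefix


def canonical_url(url, host="www.example.com"):
    """Normalize scheme, host, trailing slash, and tracking parameters."""
    parts = urlsplit(url)
    path = parts.path or "/"
    last_segment = path.rsplit("/", 1)[-1]
    # Assumed rule: directory-style paths end in a slash; file-like paths do not.
    if not path.endswith("/") and "." not in last_segment:
        path += "/"
    query = [
        (k, v)
        for k, v in parse_qsl(parts.query, keep_blank_values=True)
        if not k.startswith("utm_") and k not in TRACKING_PARAMS
    ]
    return urlunsplit(("https", host, path, urlencode(query), ""))


print(canonical_url("http://example.com/products?utm_source=news&ref=abc"))
```

A helper like this is useful for auditing: group crawled URLs by their normalized form and look for groups with more than one member.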

B. Choose the correct fix

- If a duplicate URL should not exist → use a 301 redirect to the canonical version (strong signal).

- If a variant must exist for users (e.g., sorting/filtering) but you don’t want it in Search → use rel=canonical to the main version (and ensure internal links point to canonicals).

- Use sitemaps to reinforce which URLs matter most, but don’t expect sitemaps to override strong conflicting signals.

C. Keep your signals consistent

Google recommends avoiding mixed signals (e.g., canonical says one thing, internal linking and sitemap suggest another).

D. Internal linking should prefer canonicals

Google explicitly advises linking to canonical URLs rather than duplicates.
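A quick way to find mixed signals is to compare what your sitemap and internal links point at against each URL's declared canonical. The sketch below assumes you have already extracted those three inputs from a crawl; the data shapes and names are hypothetical.

```python
def mixed_signals(canonical_of, sitemap_urls, link_targets):
    """List URLs that are promoted by the sitemap or internal links
    even though their declared canonical is a different URL."""

    def non_canonical(urls):
        return sorted(u for u in set(urls) if canonical_of.get(u, u) != u)

    return {
        "sitemap": non_canonical(sitemap_urls),
        "internal_links": non_canonical(link_targets),
    }


# Hypothetical crawl data.
canonicals = {
    "https://example.com/shoes/": "https://example.com/shoes/",
    "https://example.com/shoes/?sort=price": "https://example.com/shoes/",
}
report = mixed_signals(
    canonicals,
    sitemap_urls=["https://example.com/shoes/", "https://example.com/shoes/?sort=price"],
    link_targets=["https://example.com/shoes/"],
)
print(report)
```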

P1: Site architecture & internal linking (discoverability and control)

For small and mid-sized businesses, this is often the “quiet” technical win: fixing architecture doesn’t just help crawling — it improves conversions and content discovery.

P1 checklist

- Critical pages within a few clicks: Your top services and top converting pages should not be buried.

- No orphan pages: pages that exist but have no internal inbound links (hard to discover reliably).

- Breadcrumbs: improve navigation clarity; if implemented, ensure they match the real hierarchy.
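Click depth and orphan pages can both be read off an internal link graph. The sketch below assumes you have exported that graph from a crawler as a mapping of page to linked pages; the sample paths are invented.

```python
from collections import deque


def click_depths(links, start="/"):
    """Breadth-first search: minimum number of clicks from start to each page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links, known_pages):
    """Known pages that no other page links to."""
    linked = {t for src, targets in links.items() for t in targets if t != src}
    return sorted(set(known_pages) - linked)


# Hypothetical link graph.
site = {
    "/": ["/services/", "/blog/"],
    "/services/": ["/", "/services/audit/"],
    "/blog/": ["/"],
    "/landing/old-promo/": ["/"],
}
pages = ["/", "/services/", "/services/audit/", "/blog/", "/landing/old-promo/"]
print(click_depths(site))
print(orphan_pages(site, pages))
```

Feed `known_pages` from your sitemap or CMS export, since a crawl that follows links cannot find orphans on its own.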

Google’s AI features guidance also reinforces the importance of making content easy to find via internal links.

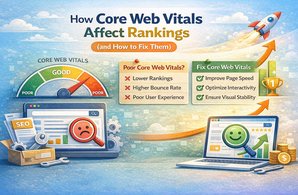

P1: Performance & Core Web Vitals (what to measure and what to fix)

In 2026, performance isn’t “optional.” Google recommends achieving good Core Web Vitals to improve search performance and the overall user experience.

Core Web Vitals include LCP, INP, and CLS.

What thresholds should you target?

Google’s Web Vitals guidance defines “good” thresholds for each metric (commonly referenced across CWV documentation):

- LCP: 2.5 s or less (good)

- INP: 200 ms or less (good)

- CLS: 0.1 or less (good)
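These thresholds are easy to encode as a pass/fail check. In this sketch the metric keys and the sample measurements are made up; plug in field data from whatever source you trust.

```python
# "Good" thresholds as listed above: LCP in seconds, INP in milliseconds, CLS unitless.
GOOD_THRESHOLDS = {"lcp_s": 2.5, "inp_ms": 200, "cls": 0.1}


def failing_metrics(measured):
    """Return the metric names whose measured value exceeds the 'good' threshold."""
    return sorted(
        name for name, limit in GOOD_THRESHOLDS.items() if measured[name] > limit
    )


# Hypothetical measurements for one template.
service_template = {"lcp_s": 3.1, "inp_ms": 180, "cls": 0.1}
print(failing_metrics(service_template))
```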

P1 checklist: the fixes that usually move CWV the most

A. LCP (loading performance)

- Optimize the largest above-the-fold element (often hero image or headline block).

- Reduce render-blocking CSS/JS on key templates.

- Improve server response time for primary pages (hosting, caching, CDN).

B. INP (interaction responsiveness)

- Reduce main-thread blocking JS.

- Delay or remove heavy third-party scripts where possible.

- Make interactions lightweight on mobile.

C. CLS (visual stability)

- Reserve space for images, embeds, and dynamic components.

- Avoid inserting banners above existing content without layout reservation.

How to operationalize CWV (without endless debate)

Pick your top 3–5 templates (home, service, category, blog, location). Fixing templates scales improvements across hundreds or thousands of URLs.

P1–P2: JavaScript, rendering & content availability

Many SMB sites use modern frameworks, heavy page builders, and client-side rendering patterns. This is fine — until key content is only available after complex JS execution.

Google provides dedicated guidance on JavaScript SEO basics.

P1 checklist: confirm critical content is “available to Search”

- Is the main content present in the rendered HTML Google sees (not just “loading…” placeholders)?

- Are internal links discoverable in rendered output?

- Are titles, headings, and primary text visible without user interactions?
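One practical test for the link question is to compare the links in the initial HTML response with those in the rendered DOM. The sketch below only does the comparison; obtaining the rendered HTML (for example from a headless browser or a URL inspection tool) is assumed to happen elsewhere.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect href values from anchor tags."""

    def __init__(self):
        super().__init__()
        self.hrefs = set()

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.add(href)


def links_in(html):
    collector = LinkCollector()
    collector.feed(html)
    return collector.hrefs


def js_only_links(initial_html, rendered_html):
    """Links that exist only after JavaScript has run."""
    return sorted(links_in(rendered_html) - links_in(initial_html))


# Hypothetical snapshots of the same page.
initial = '<div id="app">Loading...</div><a href="/contact/">Contact</a>'
rendered = '<div id="app"><a href="/services/">Services</a></div><a href="/contact/">Contact</a>'
print(js_only_links(initial, rendered))
```

A long list here is not automatically a problem, but it tells you which links depend entirely on rendering succeeding.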

Dynamic rendering: understand what it is (and why it’s not ideal)

Google describes dynamic rendering as a workaround for cases where JS-generated content isn’t available to search engines — and explicitly notes it’s a workaround, not a recommended long-term solution.

What to do in 2026

- Prefer rendering approaches that deliver content reliably (server-rendered output or pre-rendering) where feasible.

- Use dynamic rendering only as a temporary bridge if you’re otherwise blocked.

P2: Structured data (schema) that matches what users see

Structured data can help search engines understand your content, but the implementation must be honest and aligned with what’s visible.

Google’s AI features guidance stresses that structured data should match visible text — and also notes you don’t need special markup to appear in AI Overviews/AI Mode.

P2 checklist (safe, high-ROI schema)

- Organization / Website schema where appropriate

- Breadcrumb schema if you use breadcrumbs

- Article/BlogPosting on content pages

- FAQPage schema only when the FAQ exists on-page (and answers are visible)

Do not

- Mark up content users can’t see

- Add FAQ schema “just for SEO” if no FAQ is present (risk and low long-term value)
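A simple guard against invisible markup is to generate the JSON-LD from the same question-and-answer pairs that are rendered on the page. The sketch below follows the schema.org FAQPage shape; the function name and the sample pair are illustrative.

```python
import json


def faq_jsonld(visible_faq):
    """Build FAQPage JSON-LD from (question, answer) pairs shown on the page.

    Returns None when there is no on-page FAQ, so no markup gets emitted.
    """
    if not visible_faq:
        return None
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in visible_faq
        ],
    }
    return json.dumps(data, indent=2)


faq = [("Is robots.txt enough to keep a page out of Google Search?", "No. Use noindex or access protection.")]
print(faq_jsonld(faq))
```

Because the markup and the visible FAQ share one data source, they cannot drift apart.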

P1: International SEO essentials (hreflang for US/Canada scenarios)

If you target both the US and Canada — or Canada with English/French variants — hreflang mistakes can cause the wrong page to appear in the wrong region or language.

Google’s localized versions (hreflang) documentation:

- Explains that hreflang helps Google understand localized variants

- Notes Google doesn’t use hreflang or the HTML lang attribute to detect language; it uses algorithms — hreflang is for variant mapping.

P1 checklist: hreflang implementation rules that matter

- Use one supported method (HTML tags, HTTP headers, or sitemaps).

- Ensure alternates are correctly mapped (especially for en-CA / fr-CA where relevant).

- Keep canonical + hreflang consistent (don’t canonicalize different locales into one URL without strategy).
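Mapping errors often show up as missing return links: page A lists page B as an alternate, but B does not list A back. The sketch below checks for that, assuming you have already extracted each page's hreflang annotations; the URLs are placeholders.

```python
def missing_return_links(annotations):
    """Find (page, alternate) pairs where the alternate does not link back.

    annotations maps each page URL to its {hreflang code: alternate URL} set.
    """
    missing = []
    for page, alternates in annotations.items():
        for target in alternates.values():
            if target != page and page not in annotations.get(target, {}).values():
                missing.append((page, target))
    return sorted(missing)


US = "https://example.com/us/"
CA_EN = "https://example.com/ca/"
CA_FR = "https://example.com/ca/fr/"

# Hypothetical annotations; the fr-CA page forgets to list the en-US page.
found = {
    US: {"en-US": US, "en-CA": CA_EN, "fr-CA": CA_FR},
    CA_EN: {"en-US": US, "en-CA": CA_EN, "fr-CA": CA_FR},
    CA_FR: {"en-CA": CA_EN, "fr-CA": CA_FR},
}
print(missing_return_links(found))
```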

P0–P1: Migrations & redesigns (the “don’t lose traffic” playbook)

Migrations are where technical SEO becomes business-critical. Google provides explicit guidance for moving a site with URL changes and minimizing negative impact.

P0 migration checklist (URL changes)

- Prepare the new site (staging and QA)

- Build a URL mapping (old → new)

- Implement redirects from old URLs to new URLs

Domain moves: Use Change of Address correctly

Google’s Change of Address tool is intended for moving from one domain/subdomain to another — and should be used after you’ve moved and redirected your site.

A practical “migration safety net”

- Crawl the old site and the new site pre-launch

- Validate redirect rules with a representative sample

- Post-launch: monitor indexing + crawl anomalies daily for 2–4 weeks
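Before launch, the redirect map itself can be sanity-checked offline. The sketch below flags old URLs with no mapping, redirect chains (more than one hop), and loops. It works on the mapping as data, not on live HTTP responses, and the sample paths are invented.

```python
def audit_redirect_map(redirects, old_urls):
    """Classify problems in an old -> new redirect mapping."""
    report = {"unmapped": [], "chains": [], "loops": []}
    for old in old_urls:
        if old not in redirects:
            report["unmapped"].append(old)
            continue
        seen = [old]
        current = old
        looped = False
        while current in redirects:
            nxt = redirects[current]
            if nxt in seen:  # we have come back to a URL already visited
                looped = True
                break
            seen.append(nxt)
            current = nxt
        hops = len(seen) - 1
        if looped:
            report["loops"].append(old)
        elif hops > 1:
            report["chains"].append(old)
    return report


# Hypothetical mapping.
mapping = {"/old-a": "/new-a", "/old-b": "/old-a", "/x": "/y", "/y": "/x"}
print(audit_redirect_map(mapping, ["/old-a", "/old-b", "/old-c", "/x"]))
```

After launch, the same list of old URLs makes a good sample for checking the live redirects.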

AI readiness (what’s real vs. hype in 2026)

There’s a lot of noise around “AI SEO,” but Google’s stance on AI features is unusually direct:

There are no additional requirements to appear in AI Overviews or AI Mode, and no special optimizations are necessary beyond fundamental SEO best practices.

So “AI readiness” in technical SEO looks like:

- Your pages are crawlable and indexable (P0)

- Your canonical signals are clean (P0–P1)

- Your content is accessible and easy to find via internal linking (P1)

Optional: controlling non-Google AI crawlers

If you care about how AI products crawl your site, OpenAI documents its crawlers and how webmasters can manage them via the robots.txt user-agent directive (for example, OAI-SearchBot and GPTBot).
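If you do add crawler-specific rules, it is worth verifying they behave as intended. The sketch below tests a sample robots.txt with Python's standard-library parser. The policy shown (block GPTBot, allow OAI-SearchBot) is only an illustration, not a recommendation, and the parser's matching may differ from how each crawler interprets the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content with per-crawler groups.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: OAI-SearchBot
Allow: /

User-agent: *
Disallow: /cart/
"""


def allowed(robots_txt, user_agent, path="/"):
    """Check whether user_agent may fetch path under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, path)


for agent in ("GPTBot", "OAI-SearchBot", "Googlebot"):
    print(agent, allowed(ROBOTS_TXT, agent))
```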

Optional/experimental: llms.txt

The /llms.txt file is a proposal to standardize how LLMs interact with a website during inference. Treat it as experimental — not a ranking requirement.

Common mistakes that hurt rankings the most

- Blocking crawling with robots.txt and expecting it to behave like noindex (it doesn’t).

- Using inconsistent canonical signals (canonical says A, internal links and sitemap push B).

- Leaving massive parameter duplicates indexable without a strategy.

- Shipping a redesign without URL mapping and redirect QA.

- Chasing “AI tricks” instead of meeting basic Search eligibility (crawl/index/snippet).

FAQ

Do I need special technical optimization to appear in Google AI Overviews / AI Mode?

No. Google states there are no additional requirements or special optimizations beyond fundamental SEO best practices and being eligible for Search.

Is robots.txt enough to keep a page out of Google Search?

No. Google explains that robots.txt is primarily for crawl control and is not a mechanism for keeping pages out of Google; use noindex or access protection instead.

What’s stronger: rel=canonical or a redirect?

Google treats both redirects and rel=canonical as strong canonicalization signals; sitemaps are weaker.

What are the “good” Core Web Vitals targets?

Google’s Web Vitals guidance defines “good” as an LCP of 2.5 s or less, an INP of 200 ms or less, and a CLS of 0.1 or less.

How should I handle a site migration with URL changes?

Google recommends preparing the new site, creating URL mappings, and implementing redirects from old URLs to new ones. For domain moves, use Change of Address after redirects are in place.